The Gerrit spring hackathon just ended on Discord, with GerritForge attending from London, SAP, Google from Germany, and WikiMedia from France. One of the PoCs we have been working on is a prototype for a scalable and “intelligent” repository optimizer.

Following last year’s release of the git-repo-metrics plugin, presented at the previous user summit, which tracks live information on Git repositories, we thought that a tool that can “automagically” act on the collected data would be helpful.

We started working on what we called RepoVet©, a modular tool that can make intelligent and autonomous decisions about what needs to be improved in a repository.

Architecture

The main constraints we aimed for were:

- Git server implementation agnostic: we want the tool to be usable on any Git repository, not necessarily one managed by Gerrit Code Review

- Modular: the different components of the tool must be independent and pluggable, so that they can be integrated into existing Git server setups.

After a couple of whiteboard rounds, we developed the following components: Monitor, RuleEngine, and Optimizer.

Each is independent, highly configurable, and communicates with the other components via a message broker (AWS SQS). The responsibilities of each are:

- Monitor: watches the filesystem and notifies about activity happening in the Git repository, e.g., an increase or decrease in repository size

- RuleEngine: listens for notifications from Monitor and decides whether any activity is needed on the repository, e.g., a git GC, a git repack, etc. The decision can be based not only on the repository parameters (number of loose objects, number of refs, etc.) but also, for example, on traffic patterns. If RuleEngine decides an optimization is needed, it will notify the Optimizer.

- Optimizer: listens for instructions coming from the RuleEngine and executes them. This can be a git GC, a git repack, etc. It is not its call to decide which activity to carry out. However, it does determine whether it is the right moment. For example, it will run concurrent GCs, or any operation at all, only if there are enough resources.

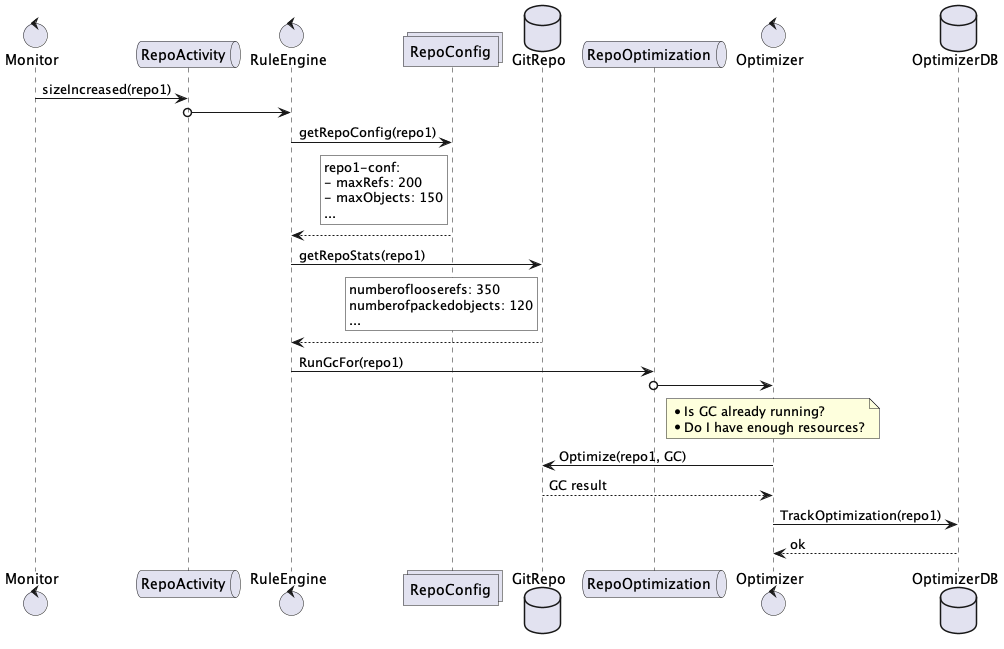
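As a rough illustration of the Monitor's responsibility, the sketch below measures a repository's on-disk size and builds a notification when that size changes. The function names and the notification fields are hypothetical, chosen only for this example; a real Monitor would publish the notification to the broker instead of returning it.

```python
import os


def repo_size_bytes(repo_path):
    """Sum the size of every regular file found under repo_path."""
    total = 0
    for root, _dirs, files in os.walk(repo_path):
        for name in files:
            try:
                total += os.path.getsize(os.path.join(root, name))
            except OSError:
                # The file vanished between listing and stat (e.g. a repack
                # is in progress); skip it rather than fail the scan.
                continue
    return total


def size_change_event(repo_path, previous_size):
    """Return a notification dict if the size changed, otherwise None."""
    current_size = repo_size_bytes(repo_path)
    if current_size == previous_size:
        return None
    return {
        "repository": repo_path,        # hypothetical field names
        "previous_size": previous_size,
        "current_size": current_size,
        "delta": current_size - previous_size,
    }
```

Polling the size is the simplest option for a prototype; a filesystem-notification mechanism could replace it without changing the shape of the notification.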

Following is an example of interaction among the components, where the decision to run a GC is based on some thresholds set in the repository configuration:

In the above example, Monitor reports an increase in the repository size and notifies the RuleEngine via the broker RepoActivity queue.

RuleEngine gets the repository configuration and decides a GC is needed since some thresholds were exceeded. It sends the operation type and the repository to the Optimizer via the broker RepoIntervention queue.

Optimizer checks whether other GCs are currently running and whether there are enough resources, then runs the GC and keeps track of its result and timestamp.
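The interaction just described can be sketched in a few lines. This is only an illustration under stated assumptions: in-memory `queue.Queue` objects stand in for the RepoActivity and RepoIntervention SQS queues, the `max_loose_objects` threshold and the message fields are hypothetical, and the actual operation and resource check are injected as callables.

```python
import queue
import time


def rule_engine_step(activity_queue, intervention_queue, thresholds):
    """Consume one activity notification; request a GC if a threshold is exceeded."""
    event = activity_queue.get_nowait()
    if event["loose_objects"] > thresholds["max_loose_objects"]:
        intervention_queue.put(
            {"repository": event["repository"], "operation": "gc"}
        )


class Optimizer:
    def __init__(self, run_operation, has_enough_resources):
        self.run_operation = run_operation                # callable(repository, operation) -> bool
        self.has_enough_resources = has_enough_resources  # callable() -> bool
        self.running = set()   # repositories with an operation in progress
        self.history = []      # result and timestamp of each executed operation

    def step(self, intervention_queue):
        """Execute one requested operation, or put it back if now is not the right moment."""
        request = intervention_queue.get_nowait()
        if self.running or not self.has_enough_resources():
            intervention_queue.put(request)  # try again later
            return False
        repository = request["repository"]
        self.running.add(repository)
        try:
            succeeded = bool(self.run_operation(repository, request["operation"]))
        finally:
            self.running.discard(repository)
        self.history.append(
            {
                "repository": repository,
                "operation": request["operation"],
                "succeeded": succeeded,
                "timestamp": time.time(),
            }
        )
        return True
```

In a real deployment `run_operation` could, for instance, shell out to `git gc` in the repository directory, and `has_enough_resources` could inspect system load; both are left abstract here because the prototype's choices are not described.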

As you can see, we met the criteria we initially aimed for, since:

- None of the components needs or uses Gerrit, even though the repository was hosted in a Gerrit Code Review setup

- Components are independent and swappable. For example, if we used Gerrit, the Monitor could be swapped with a plugin acting as a bridge between Gerrit stream events and the broker.
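To make the swap concrete, here is a minimal sketch of what such a bridge might do with one event. It assumes the `ref-updated` event shape emitted by Gerrit stream events (a `type` field plus a `refUpdate` object carrying `project` and `refName`); the output fields are hypothetical, and the exact event schema should be checked against the Gerrit documentation for your version.

```python
import json


def stream_event_to_activity(line):
    """Translate one stream-events JSON line into an activity notification, or None."""
    event = json.loads(line)
    if event.get("type") != "ref-updated":
        return None  # only ref updates are treated as repository activity here
    ref_update = event.get("refUpdate", {})
    project = ref_update.get("project")
    if project is None:
        return None
    return {
        "repository": project,          # hypothetical notification fields
        "activity": "ref-updated",
        "ref": ref_update.get("refName"),
    }
```

Because the rest of the pipeline only sees the notification, the RuleEngine would not need to know whether it came from a filesystem watcher or from this bridge.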

Lessons learned

- Having low coupling among the different components will allow:

  - The user to pick only the components needed in their installation

  - The user to integrate the tool into a pre-existing infrastructure

  - The developers to potentially work with different technologies and different lifecycles

- SQS proved to be straightforward to work with during the prototyping phase, allowing us to quickly spin up the service locally with Docker

- Modeling the messages among the components is crucial and has to be carefully thought-out at the beginning

- More planning is needed when choosing the broker system; for example, handling non-processable messages and managing dead-letter queues (DLQs) has not been considered at all
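The last two points can be illustrated together. The sketch below models one message type explicitly, with a schema version, and shows one possible policy for non-processable messages: retry a few times, then set the payload aside. Everything here is an assumption made for illustration: the `RepoIntervention` fields, the `consume` helper, and the in-memory `dead_letters` list. With SQS, a similar effect is normally obtained through a queue redrive policy and its `maxReceiveCount`, rather than in application code.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RepoIntervention:
    """Hypothetical message sent from the RuleEngine to the Optimizer."""
    repository: str
    operation: str
    schema_version: int = 1

    def to_json(self):
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, payload):
        data = json.loads(payload)
        if not isinstance(data, dict) or data.get("schema_version") != 1:
            raise ValueError("unsupported or malformed message")
        return cls(**data)


def consume(payload, receive_count, handler, dead_letters, max_receive_count=3):
    """Process one payload; return 'done', 'retry', or 'dead'."""
    try:
        handler(RepoIntervention.from_json(payload))
        return "done"
    except Exception:
        # Parsing or handling failed: retry until the limit, then park the
        # payload so that it stops blocking the queue.
        if receive_count >= max_receive_count:
            dead_letters.append(payload)
            return "dead"
        return "retry"
```

Carrying a version field in every message makes it possible to evolve the format later without the components having to be upgraded in lockstep.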

Next steps

We are aiming to start working on an MVP as soon as possible, perhaps starting from one of the components and slowly adding the others.

As soon as we have an MVP, the code will, as usual, be made available, and contributions and feedback will be welcome.

As is tradition, we will use gerrithub.io to dogfood it, and we will report back.

Stay tuned!