Performance has always been one of the core features of Gerrit. After all, this was a topic very dear to the project’s co-founder, Shawn Pearce, who always used to say “Performance is a feature”. It is no surprise, then, that a plugin that speeds up some of the most important features of Gerrit, like clones/fetches, change creation, change submission, and ACL evaluation, catches our attention. So let’s dive into how Cached-RefDB works and how it achieves such incredible results.

Before we get started though, you can also watch the talk I gave on this topic at:

The Issue

Our story starts with the apparently unrelated Change 260992. Digging back all the way to 2020, in this change our colleagues at Google highlight something that is crucially important:

Data on googlesource.com suggests that we spend a significant amount of time loading accounts from NoteDb. This is true for all Gerrit installations, but especially for distributed setups or setups that restart often.

Since Gerrit 3.x introduced NoteDb, pretty much any operation in Gerrit needs to read from or write to disk, since everything is stored in Git itself. This can become extremely heavy, so it is crucial to cache the code paths that are executed often. And very few code paths are executed more often than accessing a user’s details, which include the ACLs that decide what operations the user can perform anywhere in the platform.

As part of said change, Google introduced an extremely interesting approach to cache eviction: by adding the ObjectId to the cache key, cache entries are automatically invalidated when an account is updated, with no need to explicitly propagate cache evictions, which is a costly operation.

To do this, they modified the AccountCacheImpl to look like this:

    public ImmutableMap<Account.Id, AccountState> get(Collection<Account.Id> accountIds) {
      [...]
      try (Repository allUsers = repoManager.openRepository(allUsersName)) {
        Set<CachedAccountDetails.Key> keys =
            Sets.newLinkedHashSetWithExpectedSize(accountIds.size());
        for (Account.Id id : accountIds) {
          Ref userRef = allUsers.exactRef(RefNames.refsUsers(id));
          if (userRef == null) {
            continue;
          }
          keys.add(CachedAccountDetails.Key.create(id, userRef.getObjectId()));
        }
        [...]

We can see here that, for every operation, to determine whether the cache entry corresponds to the latest state on disk, we actually need to open the repository and retrieve the exact ref for that user to find the ObjectId.
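To make the trade-off concrete, here is a minimal sketch of the ObjectId-in-the-key idea. It is not Gerrit's code: `AccountKey`, `ObjectIdKeyedCacheDemo`, and the two plain maps standing in for the All-Users repository and the cache are hypothetical simplifications, and object ids are modelled as strings.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical stand-in for a cache key made of (account id, ObjectId of the user ref).
final class AccountKey {
  final int accountId;
  final String objectId;

  AccountKey(int accountId, String objectId) {
    this.accountId = accountId;
    this.objectId = objectId;
  }

  @Override
  public boolean equals(Object o) {
    if (!(o instanceof AccountKey)) {
      return false;
    }
    AccountKey other = (AccountKey) o;
    return accountId == other.accountId && objectId.equals(other.objectId);
  }

  @Override
  public int hashCode() {
    return Objects.hash(accountId, objectId);
  }
}

final class ObjectIdKeyedCacheDemo {
  // Stands in for the refs of the All-Users repository: account id -> current object id.
  final Map<Integer, String> refs = new HashMap<>();
  final Map<AccountKey, String> cache = new HashMap<>();
  int refLookups = 0; // how often we had to ask the "repository" for the ref
  int loads = 0;      // how often we had to load the account itself

  String get(int accountId) {
    refLookups++; // paid on every call, even when the account is already cached
    String objectId = refs.get(accountId);
    if (objectId == null) {
      return null;
    }
    return cache.computeIfAbsent(
        new AccountKey(accountId, objectId),
        key -> {
          loads++;
          return "account-" + key.accountId + "@" + key.objectId;
        });
  }

  public static void main(String[] args) {
    ObjectIdKeyedCacheDemo demo = new ObjectIdKeyedCacheDemo();
    demo.refs.put(1000, "aaa111");
    demo.get(1000);
    demo.get(1000);                // cache hit, but the ref was still looked up
    demo.refs.put(1000, "bbb222"); // account updated: the ref now points elsewhere
    demo.get(1000);                // new key, so the stale entry is never hit again
    // prints: ref lookups=3, loads=2
    System.out.println("ref lookups=" + demo.refLookups + ", loads=" + demo.loads);
  }
}
```

The sketch shows both halves of the design: no explicit eviction is needed when the account changes, but every single `get` pays for a ref lookup, which in real Gerrit means opening the repository.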

This immediately raises a few eyebrows. In order to not access the disk, we’re constantly accessing the disk, a bit of a nonsense, isn’t it?

The whole background to this can be found at Issue 40014084, but for the purposes of this conversation, what we care about is Luca’s comment, where he reports a huge performance regression: the Accounts Cache latency goes from 0.000196ms (a number that I even struggle to pronounce) to 0.5ms, roughly 2600 times slower. You read that right: 2600 TIMES SLOWER! Mad!

To compound the issue, as mentioned before, this cache is used almost everywhere in Gerrit, with even simple installations easily calling this method thousands of times per second. 2600x slower, thousands of times over, and the recipe for disaster is complete.

How was this not caught before?

One might wonder how Google didn’t catch such a regression in their performance testing, and that would be a very fair question. Well, it turns out that in their internal JGit installation, these calls were actually cached at the repository level, so whenever they opened the repository and called exactRef, they weren’t really interacting with the disk but with a cache layer that non-googlers didn’t have access to.

This poses the question: What are non-googlers supposed to do to get around this issue?

Cached-RefDB – The solution

The answer to this question is, in my opinion anyway, Cached-RefDb.

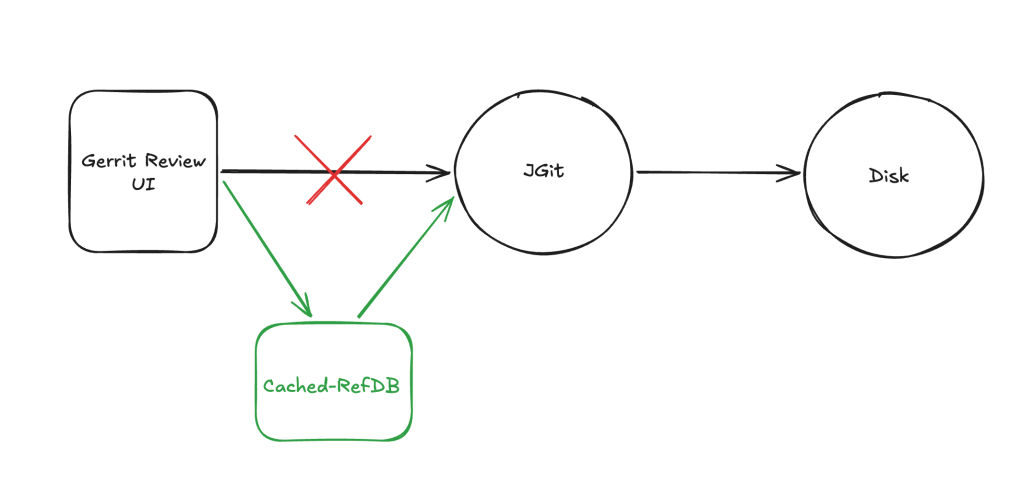

What this plugin does is extremely simple in concept: it intercepts calls to the RefDatabase and injects a cache layer before we actually go and check on disk. If we find the element in the cache, great; otherwise, we delegate to the provided implementation of the RefDatabase. Incredibly simple in concept, but incredibly powerful in practice.
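The check-the-cache-then-delegate pattern described here can be sketched in a few lines. This is only an illustration under stated assumptions, not the plugin's implementation: `RefLookup` and `CachingRefLookup` are hypothetical names, refs are modelled as plain strings rather than JGit `Ref` objects, and eviction is reduced to a manual `evict` call.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical, heavily reduced stand-in for a ref database: ref name -> object id.
interface RefLookup {
  String exactRef(String name);
}

// Decorator that answers from memory when it can and delegates to the
// wrapped (disk-backed) lookup only on a cache miss.
final class CachingRefLookup implements RefLookup {
  private final RefLookup delegate;
  private final Map<String, String> cache = new ConcurrentHashMap<>();

  CachingRefLookup(RefLookup delegate) {
    this.delegate = delegate;
  }

  @Override
  public String exactRef(String name) {
    String cached = cache.get(name);
    if (cached != null) {
      return cached; // hit: no disk access at all
    }
    String fromDisk = delegate.exactRef(name);
    if (fromDisk != null) {
      cache.put(name, fromDisk); // in this sketch, missing refs are not cached
    }
    return fromDisk;
  }

  // Assumption: something must drop the entry when the ref is updated.
  void evict(String name) {
    cache.remove(name);
  }
}

final class CachingRefLookupDemo {
  public static void main(String[] args) {
    int[] diskReads = {0};
    RefLookup disk = name -> {
      diskReads[0]++;
      return "refs/heads/master".equals(name) ? "abc123" : null;
    };
    CachingRefLookup cached = new CachingRefLookup(disk);
    cached.exactRef("refs/heads/master");
    cached.exactRef("refs/heads/master");
    // prints: disk reads=1
    System.out.println("disk reads=" + diskReads[0]);
  }
}
```

Because the wrapper exposes the same interface as the thing it wraps, callers do not need to change, which is why every code path that reads refs benefits at once.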

And, to be clear, as mentioned above, this doesn’t just impact Accounts cache access; virtually any operation can benefit from using this new cache layer, including your CI/CD clones and fetches, as well as your developers’ pushes and change submissions. CRAZY, CRAZY GAINS!

As the injected cache is, to all intents and purposes, like any other Gerrit cache, eviction is handled seamlessly by Gerrit’s internal mechanisms.

Let the numbers speak – Benchmarking

Let’s now see how a vanilla Gerrit compares to a Gerrit with cached-refdb installed.

First: the testing field

We used our local testing environment, the same one we use when we need to really push Gerrit. It’s composed of a physical machine with:

– 128 CPUs

– 128GB of memory

– Intel(R) Xeon(R) Gold 6438Y+ processors

– local SSD disk.

For the repository, the primary metric we’re interested in is the number of refs. We used a repository with 1.5 million refs, big, but not huge. If your repository has very few refs and many large binary objects, you’re unlikely to see the kind of performance improvements I’m about to present.

And finally the config.

For the non-cached-refdb scenario, we used a plain-vanilla Gerrit, whereas in the cached-refdb scenario it’s crucial to set core.usePerRequestRefCache = false in your etc/gerrit.config. This setting introduces a per-thread, in-memory ref cache for each operation, which can cause Gerrit to go split-brain even in a single-node installation. At GerritForge we usually recommend disabling it anyway, but it does provide a performance boost, even if at the cost of potentially serious issues. Since we wanted to test against the fastest vanilla Gerrit that we could, we left it enabled for the vanilla run.
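For reference, the setting named above would look roughly like this in etc/gerrit.config. The file uses Git-config syntax, so if a [core] section already exists, the line belongs inside it rather than in a second section:

```
[core]
  usePerRequestRefCache = false
```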

Another important setting to look at is in your jgit.config, with:

    [core]
      # default; DEPRECATED - now trustStat
      trustFolderStats = always

We’ll revisit the meaning of this setting in a minute, but for now, it’s sufficient to know that this is the default setting and also the one that guarantees the best performance from a JGit perspective; more on this later.

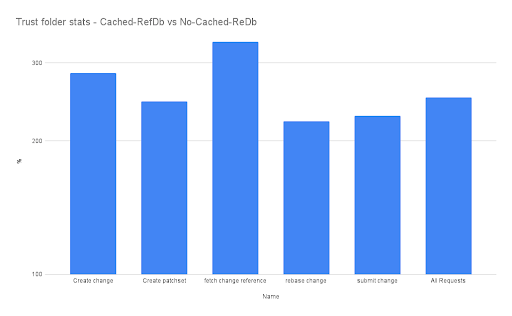

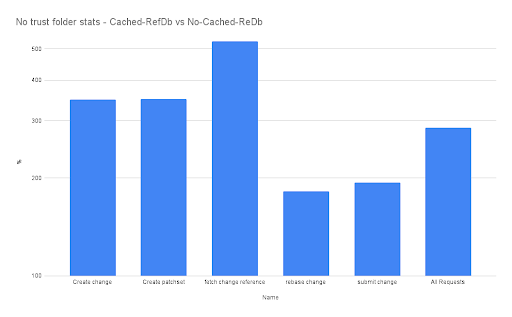

In this graph, we see how much quicker, in percentage, Gerrit with Cached-RefDB is compared to vanilla Gerrit. Essential user journeys gain a massive advantage from using cached-refdb, with performance improving by 2 to 3 times! In a world where we often aim for improvements of a few percentage points, suddenly delivering 2 to 3 times the performance is HUGE.

But what about resource utilization, I hear you ask?

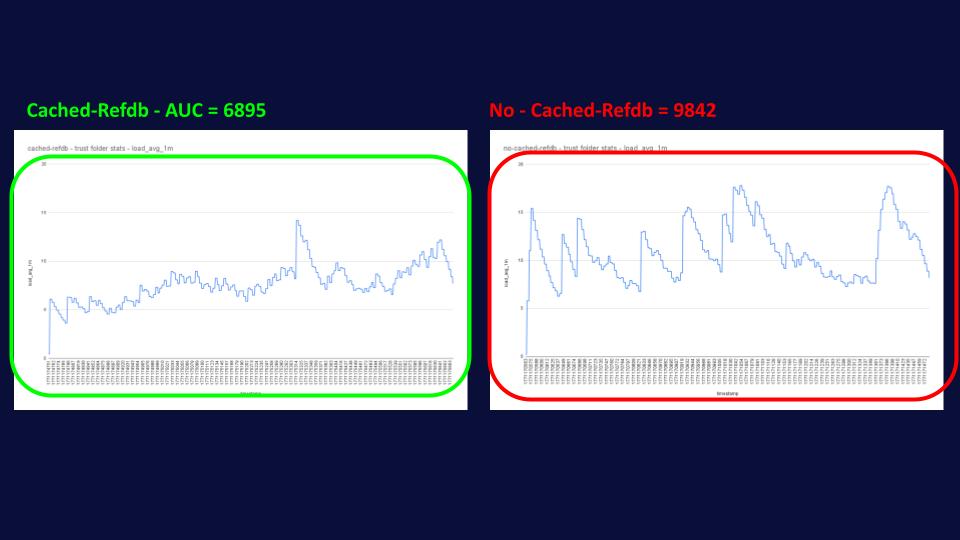

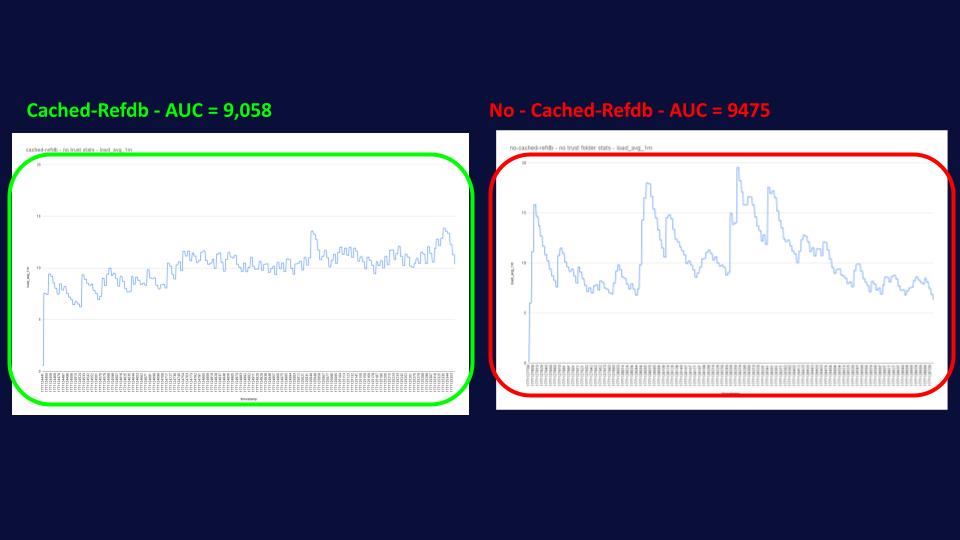

Well, that’s where it gets really interesting. As you can see from the graph below, cached-refdb delivers this massive performance boost while using fewer resources and in a more predictable way, reducing spikes in system load and helping admins with their capacity planning.

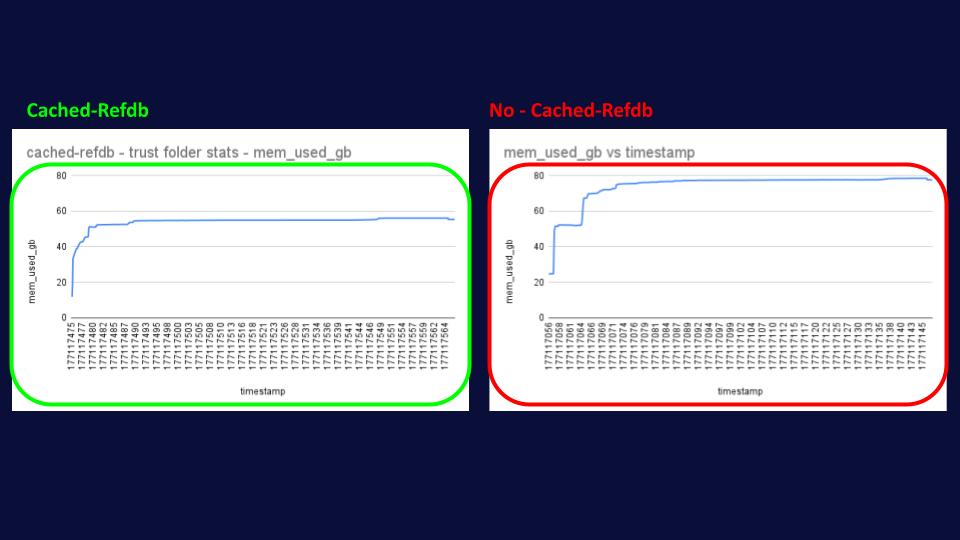

As if all of the above wasn’t enough, we also observed a HUGE reduction in memory utilization of up to 25%, going from 80GB in the no-cached-refdb simulation to only 60GB with cached-refdb. This might sound a little counterintuitive, as we’re allocating more memory for cached-refdb to store an extra cache; however, what’s happening behind the scenes is that without cached-refdb, JGit needs to allocate and deallocate objects much more frequently, causing greater fragmentation in the heap memory that Java clearly isn’t able to GC as efficiently as in the other scenario.

But let’s go back to our configs, in particular `trustFolderStats`. I promised you more details, and here they are. This setting controls when JGit reloads files from disk. By setting it to always, we tell JGit to assume the stats in memory for the file are correct and not reopen it. That’s fine for a single-node installation, since no other processes should be modifying the files on disk, so JGit can assume the latest information it has for them is current. When we move to highly available installations, though, in particular those using the high-availability plugin, this assumption no longer holds true, as multiple Gerrit nodes are writing to disk without the others knowing.

This means that if you’re running Gerrit with a high-availability setup, it’s imperative that you have the following configuration in your jgit.config:

    [core]
      # DEPRECATED - now trustStat
      trustFolderStats = false
      trustPackedRefsStat = after_open

This tells JGit to never assume that the file hasn’t changed and always go and read it from disk, unless it’s trying to read the packed-refs file. In that case, it can trust that it’s the up-to-date version while it keeps it open, but if it closes it, it’ll need to go and re-read it. That’s because operations on packed-refs are locking; therefore, there’s less risk of another process modifying it while you hold the lock.

Overall, these settings can have huge performance impacts on Gerrit, potentially seriously affecting throughput and system load.

It’s in this scenario that cached-refdb once again shines, helping JGit maintain the same level of disk access as before. In this case, we can see that the difference in latency between the cached-refdb and the vanilla Gerrit with this setting is even greater, with common operations now being 3 to 5 times quicker!

3 TO 5 TIMES! THAT’S HUGE!

And again, we can observe that the system load for Gerrit with cached-refdb hasn’t changed much, while the one without cached-refdb is even a bit more spiky than before.

Regarding memory consumption, as expected, the profile is exactly the same as above, with cached-refdb using 25% less memory than its counterpart.

Conclusion

All in all, cached-refdb is a relatively small plugin, especially given the HUGE performance boost it can provide for your Gerrit installation. It’s available for Gerrit 3.2 and later, so it can cater to a wide range of modern Gerrit versions.

We encourage you to try this out. You can download the plugin from the CI at [1]. Please note that if you use it with Gerrit 3.13+ and your company’s revenue exceeds $5 million, a license from GerritForge is required. You can get in touch at sales@gerritforge.com to find out more.